AI in Action: Harnessing Artificial Intelligence for Clinical Skills Development

Trainee mental health practitioners are already using AI. Estimates suggest that 92% of university students now use AI, and that they recognise the importance of developing AI skills, yet only 36% receive institutional support to do so1.

Outside of their studies, mental health practitioner trainees are expected to deliver support across various modes, including text-based support, which is a specific competency requiring development in the Psychological Wellbeing Practitioner (PWP) curriculum. However, many NHS services do not yet offer this mode to patients, which limits trainees' opportunities to develop these skills in-service. Generative AI (GenAI) offers a potential solution by simulating realistic clinical interactions in a safe setting.

So how then do we support trainees to develop both their AI skills and clinical competencies?

How to set up an AI clinical skills development task

On the University of Exeter PWP training course, students are actively encouraged to use GenAI at two points to develop their clinical skills, both through roleplays: practising information gathering at assessment, and practising information giving within treatment. These tasks are optional; the alternative is to complete the roleplay with a real person.

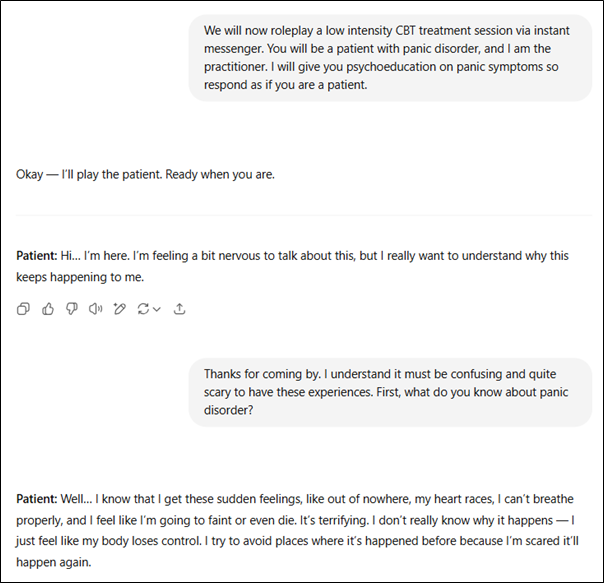

You’ll need to provide a prompt for trainees to put into a GenAI tool to keep the conversation on track. Usually, the more specific the prompt is, the better. We used something like this for the information giving treatment roleplay:

“We will now roleplay a low intensity CBT treatment session via instant messenger. You will be a patient with panic disorder, and I am the practitioner. I will give you psychoeducation on panic symptoms so respond as if you are a patient.”

Here’s an example of my conversation with ChatGPT using the above prompt:

Image 2: Introducing the roleplay to ChatGPT

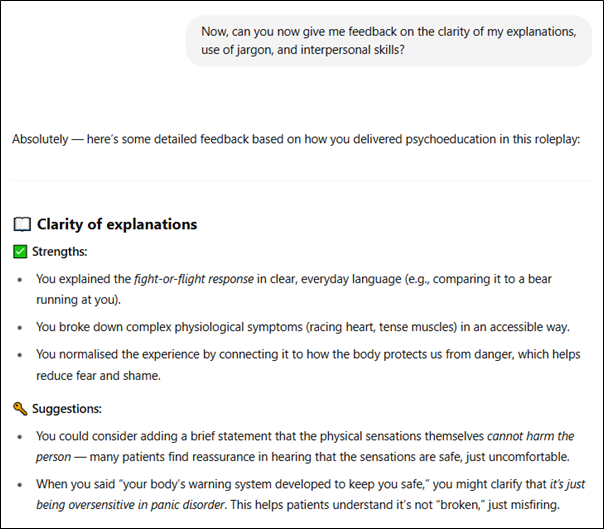

At the end of the roleplay, or at any point during it, you can ask the AI for feedback on your messages, for example with the prompt: “Can you now give me feedback on the clarity of my explanations, use of jargon, and interpersonal skills?”

Here’s what that looked like after my roleplay with ChatGPT:

Image 3: Asking ChatGPT for feedback on my roleplay

Trainees find it helpful (mostly)

This task has only been run with one cohort so far, and, as with many student feedback questionnaires, the response rate was low. However, key themes are already emerging:

- The AI sounds like a “proper patient”, giving realistic patient responses relevant to the conversation

- It mimics real-life roleplays, helping students practise session flow and questioning, and offers the same benefit of a non-judgmental space in which to make mistakes and learn

- The feedback is very helpful, sounding like it comes from a teacher or expert and balancing positives with areas for improvement

- Students recognise its value to support skill development before real patient contact

As predicted, there are some issues:

- There can be minor glitches (e.g., repetitive answers, delays in answering some questions)

- Students can feel conflicted about using it because of a more general apprehension about AI

What’s the catch?

So, as we’ve seen, GenAI can be a useful tool, but it has many limitations. It cannot understand or feel emotions, and it lacks cultural competence2. It may produce inaccurate or misleading information, and it cannot comprehend the meaning behind its responses. While GenAI is a valuable supplementary tool for practising skills, it cannot and should not replace the experience of working with real patients.

So, what now?

Beyond clinical skills development, GenAI may also help students understand complex concepts, particularly students for whom English is a second language3, and it can help with literature searches to inform evidence-based practice4. The scope of GenAI use in clinical education is huge, so that’s a blog post for another time. Watch this space?

Would you consider encouraging your trainees to practise their clinical skills using GenAI? Or do you know of trainees already using it in other ways to support their learning? Let’s keep the conversation going; I’d love to hear about your clinical experience with GenAI. AI isn’t going anywhere, and students are going to use it, so if you’re interested in using AI to support your trainees or have ideas about how it could be used in teaching, get in contact. You can find me on LinkedIn, or contact me directly on my university email.

References:

1 Freeman, J. (2025). Student Generative AI Survey 2025. Higher Education Policy Institute. https://www.hepi.ac.uk/2025/02/26/student-generative-ai-survey-2025/

2 Wang, L., Bhanushali, T., Huang, Z., Yang, J., Badami, S., & Hightow-Weidman, L. (2025). Evaluating Generative AI in Mental Health: Systematic Review of Capabilities and Limitations. JMIR Mental Health, 12(1), e70014.

3 Jisc. (2023). Student perceptions of generative AI. National Centre for AI in Tertiary Education.

4 Mansour, T., & Wong, J. (2024). Enhancing fieldwork readiness in occupational therapy students with generative AI. Frontiers in Medicine, 11, 1485325.

Special acknowledgement to Josh Cable-May, Clinical Lead at Limbic, for the inspiration to use AI in trainee clinical skills development.

Grace Riley is a Lecturer and Clinical Educator at the University of Exeter, teaching on the PGCert/GradCert Psychological Therapies Practice (Low Intensity Cognitive Behavioural Therapy) training course. She is a PWP by trade, and interested in digital developments in the PWP world, including the use of Artificial Intelligence within education and clinical practice.